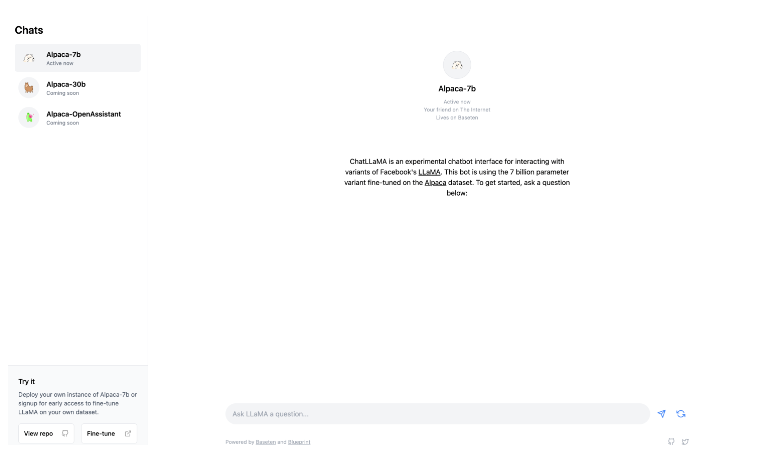

ChatLLaMA offers a chatbot interface for LLaMA, the collection of large language models recently announced by Meta (Facebook), with sizes ranging from 7 to 65 billion parameters.

LLaMA performs well for its size: its 13B model outperforms GPT-3 on most benchmarks while being more than 10 times smaller (13 billion versus 175 billion parameters). The smaller size gives users faster inference with quality that aims to be comparable to ChatGPT, at lower cost and on a single GPU. ChatLLaMA uses the 7-billion-parameter version of LLaMA, fine-tuned on the Alpaca dataset. Keep in mind, however, that LLaMA has not been refined with a Reinforcement Learning from Human Feedback (RLHF) training procedure.

Target users: Developers, chatbot enthusiasts, researchers, businesses seeking chatbot solutions, and users interested in AI-driven communication.



Similar Apps

Socratic by Google

OpenChatKit

Open Assistant

Jasper Chat

FreedomGPT