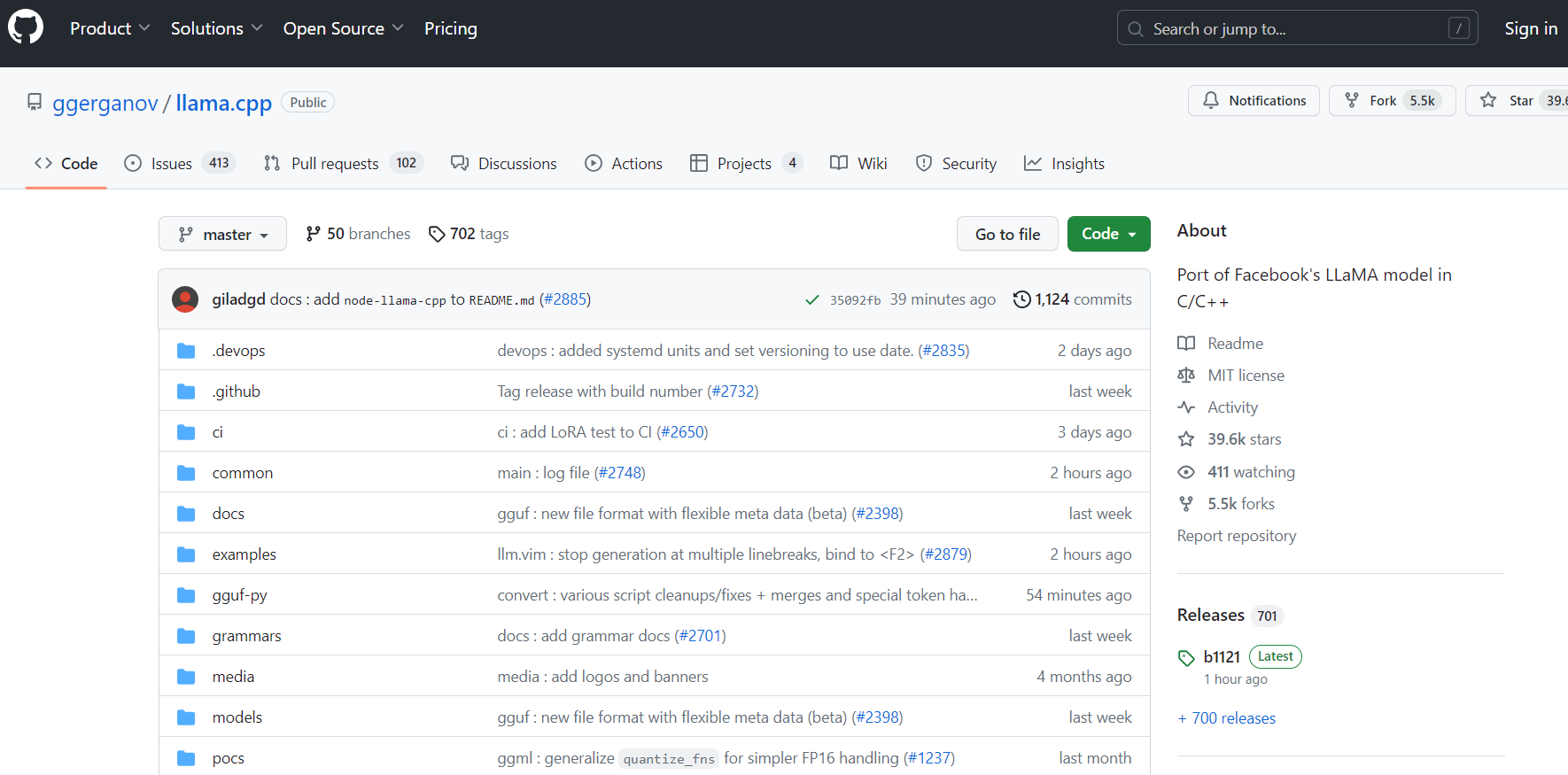

“llama.cpp” is a C/C++ port of Facebook’s LLaMA model, built specifically for efficient inference. Its standout feature is the ability to run the model with 4-bit quantization, with a particular focus on performance on MacBooks.
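To give a feel for what 4-bit quantization means, here is a minimal sketch in Python. It is an illustration only, not llama.cpp’s actual code or file format: the function names `quantize_block` and `dequantize_block` and the sample weights are hypothetical, and the scheme shown (one shared scale per small block of weights, signed 4-bit codes) is a simplified assumption.

```python
def quantize_block(values):
    """Map floats to signed 4-bit integer codes in [-8, 7] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    # Largest magnitude maps to code 7; fall back to 1.0 for an all-zero block.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-8, min(7, round(v / scale))) for v in values]
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate floats from the 4-bit codes and the shared scale."""
    return [scale * c for c in codes]


# Hypothetical block of weights, chosen only for illustration.
weights = [0.12, -0.53, 0.98, -1.40, 0.07, 0.66, -0.21, 1.05]
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)

# Worst-case reconstruction error for this block.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, round(max_error, 4))
```

Each weight is stored as a 4-bit code plus a share of one scale value instead of a 16- or 32-bit float, which is where the memory savings come from; the price is a small rounding error per weight.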

The project is flexible and runs on Mac OS, Linux, and Windows (via CMake), as well as in Docker. It also supports a wide variety of models, such as LLaMA, Alpaca, Vicuna, GPT4All, Chinese versions of LLaMA and Alpaca, and the French model Vigogne. With its focus on performance optimisation, “llama.cpp” gives developers and tech enthusiasts an efficient way to run LLaMA models across different systems.

Target users:

- Software developers

- ML engineers

- AI researchers

- Systems architects

- NLP practitioners

- Tech enthusiasts

- Platform-specific app developers (Mac OS, Linux, Windows)

- Docker users

- Multilingual AI model developers

- Performance optimization experts

Video: https://youtu.be/_mEu9QSeaZ4

Similar Apps

Replicate | Discover AI use cases

PyTorch 2.0

ChatGPT Android

TryChroma

Trudo AI