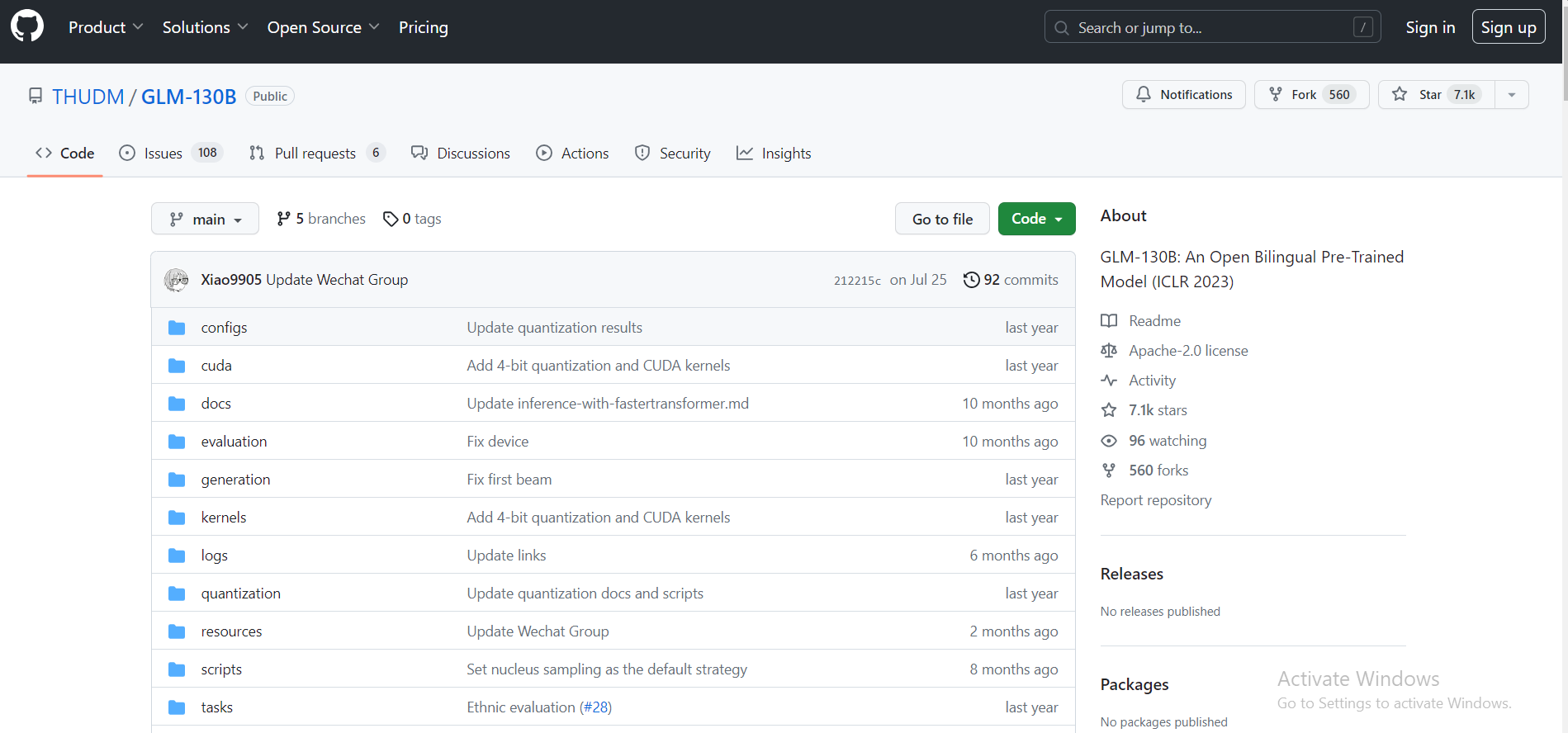

GLM-130B is an open bilingual (English and Chinese) language model with 130 billion parameters, trained on about 400 billion text tokens split evenly between the two languages. It can run inference on a single multi-GPU server, and INT4 quantization lowers the hardware requirement with almost no loss in performance. The model is reported to outperform GPT-3 175B on English tasks and ERNIE TITAN 3.0 on Chinese tasks. It supports accelerated inference on suitable hardware, and its results can be reproduced with the open-source code and model checkpoints. It runs on NVIDIA, Hygon DCU, and Ascend 910 platforms, with Sunway support planned.
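
To give a rough sense of why INT4 quantization matters for a model of this size, the sketch below estimates the memory needed to store 130 billion weights at different precisions. It is a back-of-the-envelope calculation only: it assumes weights are the sole consumer of memory and ignores activations, caches, and framework overhead. The function and variable names are illustrative and not part of any GLM-130B tooling.

```python
# Back-of-the-envelope weight memory for a 130B-parameter model.
# Assumption: weights only; activations, caches and framework overhead are ignored.
PARAMS = 130e9  # parameter count quoted in the description

# Bits used to store one parameter at each precision.
BITS_PER_PARAM = {"FP16": 16, "INT8": 8, "INT4": 4}


def weight_memory_gb(params: float, bits: int) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits / 8 / 1e9


footprints = {
    name: weight_memory_gb(PARAMS, bits) for name, bits in BITS_PER_PARAM.items()
}

for name, gb in footprints.items():
    print(f"{name}: {gb:.0f} GB")
```

Under these assumptions, INT4 weights take about 65 GB, a quarter of the 260 GB needed at FP16, which is why a quantized model fits on servers with much less total GPU memory.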

Target users:

– Researchers

– Data scientists

– NLP professionals

– Linguists studying bilingualism

– Content creators

– Businesses operating in English and Chinese markets

– Developers integrating language models

– Cross-platform developers

Similar Apps

OpenAI Codex

nanoGPT minGPT

Muse

MT-NLG by Microsoft and NVIDIA

DeepMind RETRO