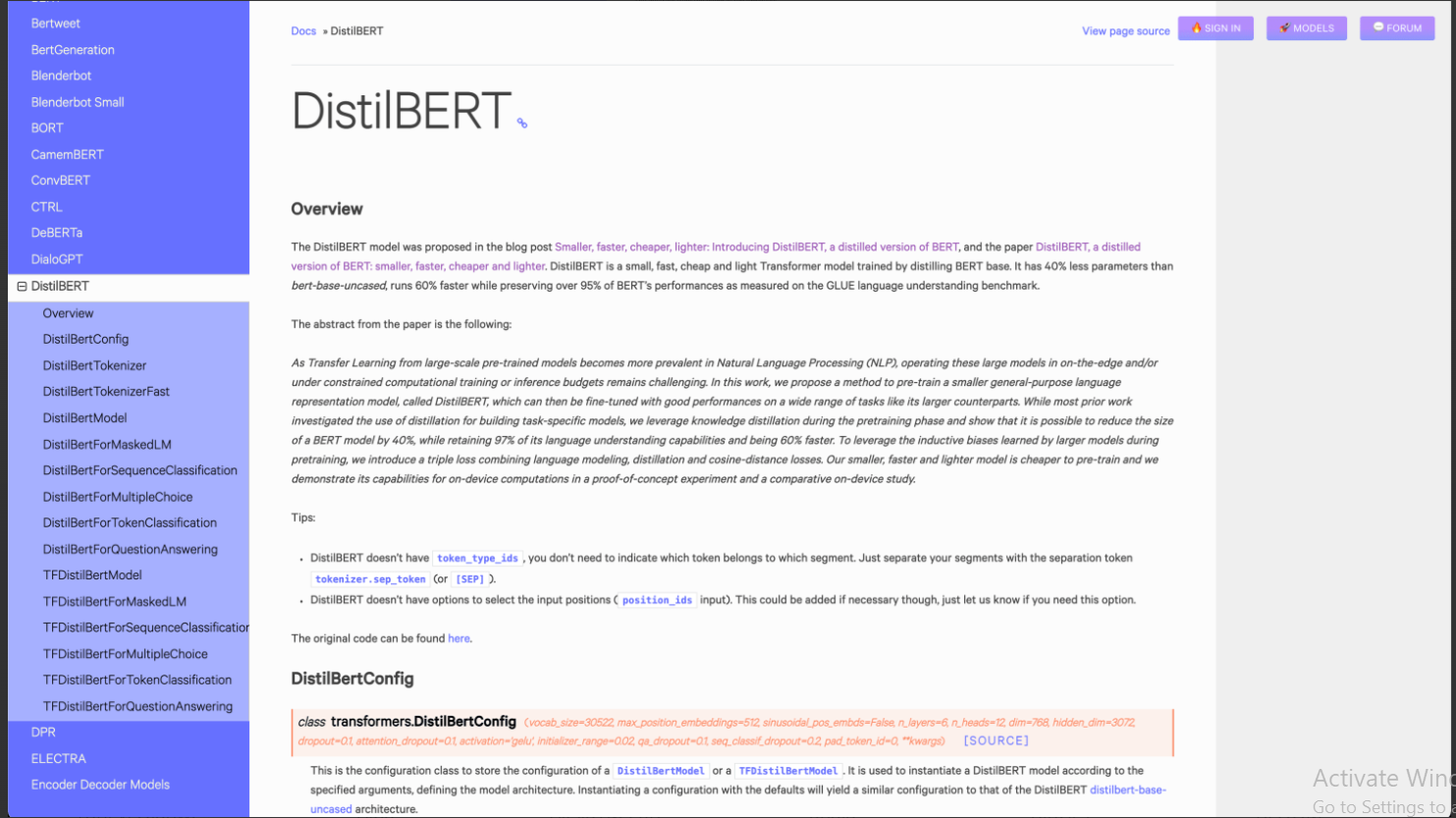

DistilBERT is a distilled version of BERT that is smaller, faster, and cheaper to run. It retains over 95% of BERT's performance while using 40% fewer parameters and running 60% faster. It is designed to make transfer learning in natural language processing practical in computationally constrained settings, as described in the accompanying paper and blog post. Unlike task-specific distillation, DistilBERT applies knowledge distillation during the pretraining phase.

The model is trained with a triple loss that combines language modeling, distillation, and cosine-distance losses. The result retains 97% of BERT's language understanding capabilities, and its smaller size and faster inference make it well suited to on-device computation.
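To make the triple loss concrete, here is a minimal sketch in pure Python. It works on a single masked token's logits and a single hidden-state vector, and combines a soft-target distillation loss (student vs. teacher, with temperature), a standard masked-language-modeling loss, and a cosine-distance loss between student and teacher hidden states. The function names, the weights `alpha`, `beta`, `gamma`, and the `temperature` value are illustrative assumptions, not the settings used to train DistilBERT.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy of the student's softened predictions against the teacher's soft targets."""
    t = softmax(teacher_logits, temperature)
    s = softmax(student_logits, temperature)
    return -sum(ti * math.log(si) for ti, si in zip(t, s))


def mlm_loss(student_logits, target_index):
    """Masked-language-modeling loss: cross-entropy against the true token id."""
    probs = softmax(student_logits)
    return -math.log(probs[target_index])


def cosine_loss(student_hidden, teacher_hidden):
    """1 - cosine similarity; pulls the student's hidden state toward the teacher's direction."""
    dot = sum(a * b for a, b in zip(student_hidden, teacher_hidden))
    norm_s = math.sqrt(sum(a * a for a in student_hidden))
    norm_t = math.sqrt(sum(b * b for b in teacher_hidden))
    return 1.0 - dot / (norm_s * norm_t)


def triple_loss(student_logits, teacher_logits, target_index,
                student_hidden, teacher_hidden,
                alpha=1.0, beta=1.0, gamma=1.0, temperature=2.0):
    # Weighted sum of the three terms; alpha/beta/gamma and temperature are
    # hypothetical placeholder values chosen for illustration only.
    return (alpha * distillation_loss(student_logits, teacher_logits, temperature)
            + beta * mlm_loss(student_logits, target_index)
            + gamma * cosine_loss(student_hidden, teacher_hidden))


loss = triple_loss([2.0, 0.5, -1.0], [2.5, 0.3, -1.2], target_index=0,
                   student_hidden=[0.1, 0.9, 0.3], teacher_hidden=[0.2, 0.8, 0.4])
print(loss)
```

In real training these terms are averaged over every masked position in a batch and computed with tensor operations; the scalar version above only shows how the three signals are combined.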

Target users: researchers, developers, NLP practitioners, businesses with computational constraints, and developers of on-device applications.

Similar Apps

OpenAI Codex

nanoGPT

minGPT

Muse

MT-NLG by Microsoft and NVIDIA

DeepMind RETRO