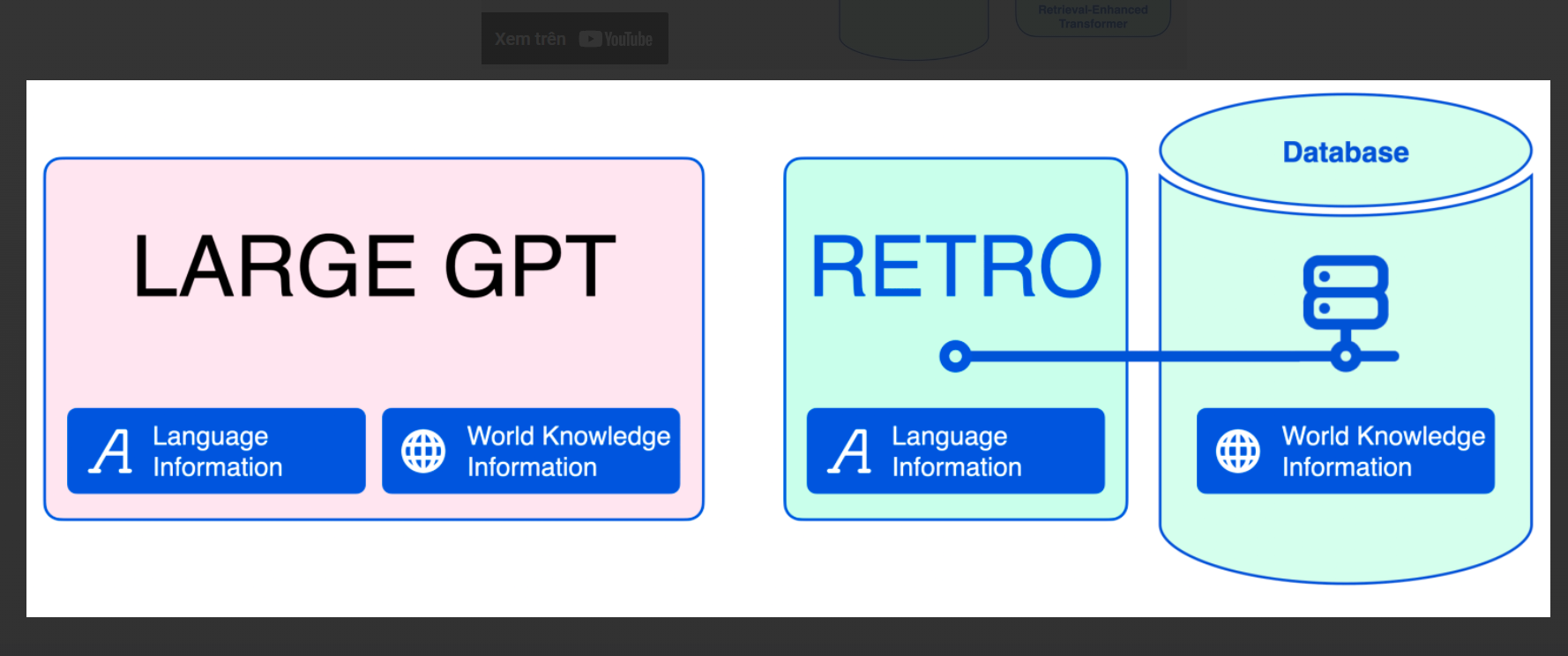

DeepMind’s RETRO (Retrieval-Enhanced Transformer) enhances auto-regressive language models with a retrieval database of 2 trillion tokens. As the model processes text, it retrieves document chunks from this corpus, chosen by their similarity to the preceding input tokens, and conditions its predictions on them.
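The retrieval step can be illustrated with a toy sketch. This is not DeepMind’s implementation: RETRO embeds chunks with a frozen BERT model and searches a very large index with approximate nearest-neighbour lookup, whereas the example below swaps in bag-of-words vectors and an exhaustive cosine-similarity scan. The names `embed`, `cosine`, `retrieve`, and `corpus_chunks` are hypothetical and exist only for illustration.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: bag-of-words counts (an assumption; RETRO uses frozen BERT embeddings)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(recent_tokens, corpus_chunks, k=2):
    """Return the k corpus chunks most similar to the recent input tokens."""
    query = embed(recent_tokens)
    ranked = sorted(corpus_chunks, key=lambda c: cosine(query, embed(c)), reverse=True)
    return ranked[:k]


# Hypothetical three-chunk corpus standing in for the 2-trillion-token database.
corpus_chunks = [
    "the eiffel tower is located in paris france",
    "photosynthesis converts light into chemical energy",
    "paris is the capital city of france",
]

neighbours = retrieve("what is the capital of france", corpus_chunks, k=2)
for chunk in neighbours:
    print(chunk)
```

In a retrieval-enhanced model, the chunks returned here would be passed to the language model as extra context for predicting the next tokens, rather than printed.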

Despite using 25 times fewer parameters than large models such as GPT-3 and Jurassic-1, RETRO performs on par with them on the Pile benchmark. After fine-tuning, RETRO can also handle knowledge-intensive downstream tasks such as question answering. In short, RETRO shows that retrieving from a massive corpus is an effective way to improve language model performance.

Researchers, data scientists, developers, and professionals in knowledge-intensive fields can use DeepMind’s RETRO.



Similar Apps

Replicate | Discover AI use cases

OpenAI Codex

nanoGPT minGPT

Muse

MT NLG by Microsoft and Nvidia AI