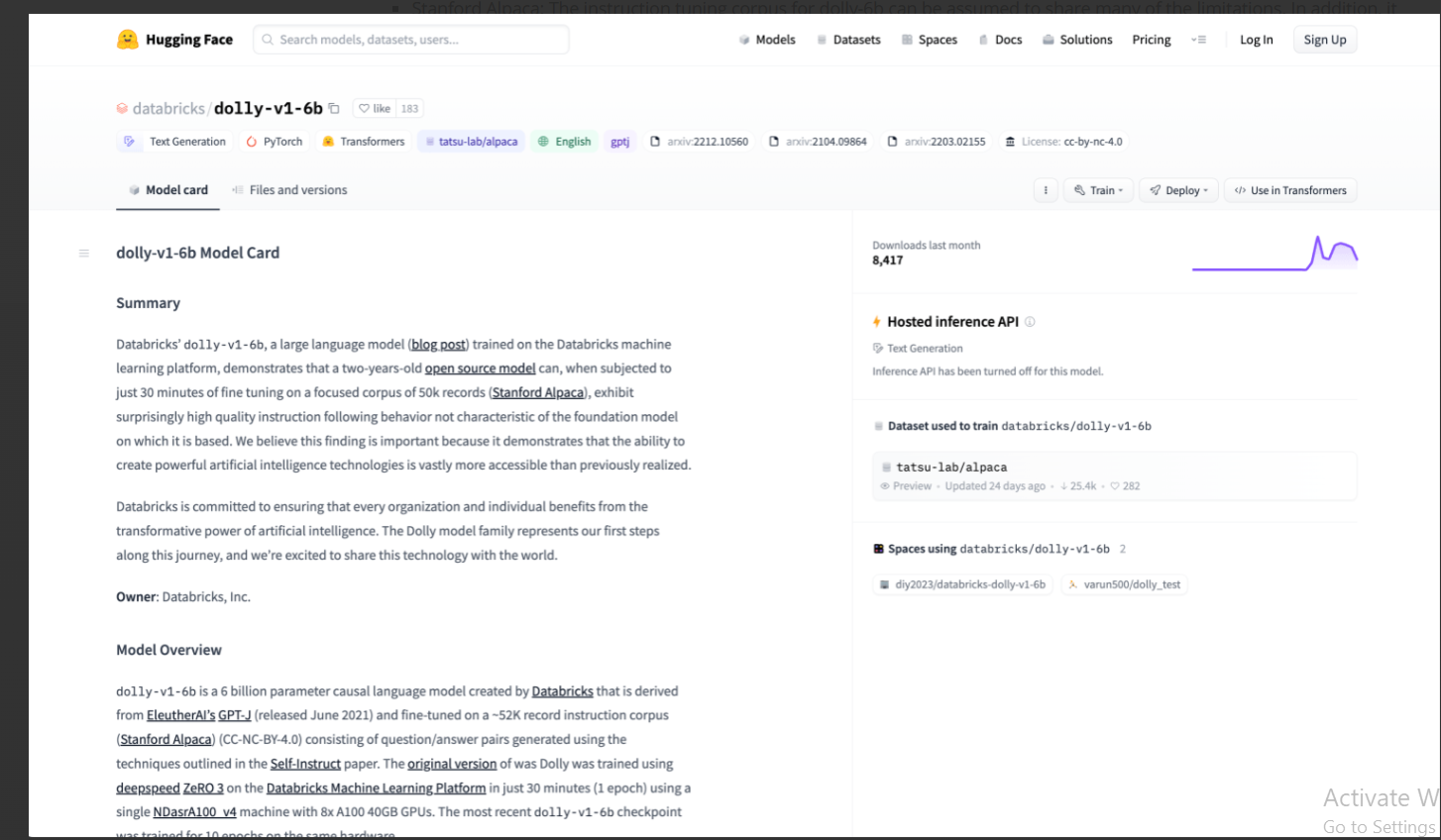

Based on the free and open-source GPT-J is a sizable language model by Databricks called Dolly. It demonstrated high-quality instruction following behaviour not seen in the base model after only 30 minutes of fine-tuning on a targeted corpus of 50,000 records from Stanford Alpaca. This finding proves that developing effective AI technologies is more feasible than previously believed.

Dolly, however, mimics the content and shortcomings of its training data, as do all language models. The outputs of the model reflect the fact that the dataset for GPT-J, which is largely derived from the internet, contains material that might be regarded as objectionable. Similar errors, meaningless responses, and other flaws can be found in Stanford Alpaca’s data, which Dolly might mimic.

Developers, data scientists, researchers, educators, and businesses seeking powerful AI-driven language capabilities.

>>> We invite you to use the latest ChatGPT Demo Free in 2024

DEMO

Similar Apps

Replicate | Discover AI use cases

Openai Codex

nanoGPT minGPT

Muse

MT NLG by Microsoft and Nvidia AI