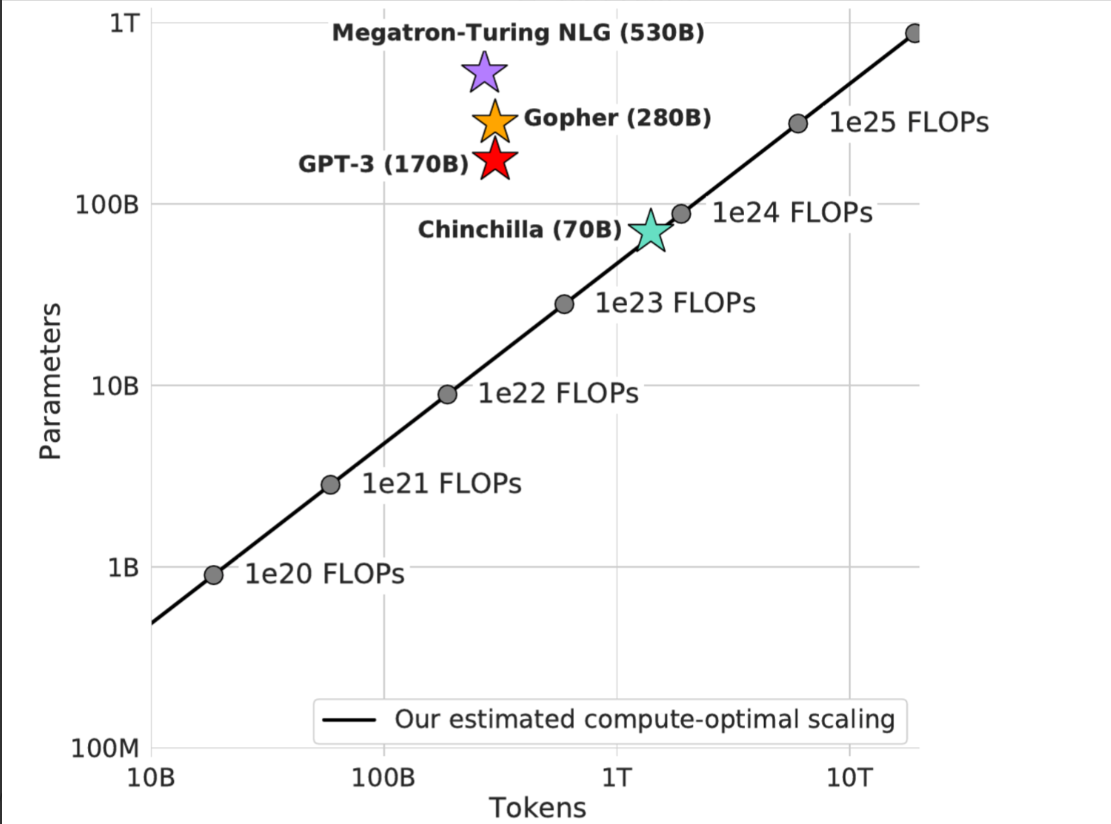

DeepMind has unveiled “Chinchilla,” a new language model that competes with rivals such as GPT-3. Chinchilla uses the same training compute budget as DeepMind's Gopher model, but it has only 70 billion parameters (a quarter of Gopher's 280 billion) and is trained on roughly four times as much data.
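The "same compute, fewer parameters, more data" trade-off can be checked with back-of-the-envelope arithmetic. The sketch below assumes the widely used approximation that training compute is about 6 × N × D FLOPs (N = parameters, D = training tokens). It also uses token counts that are not stated above: roughly 300 billion for Gopher and 1.4 trillion for Chinchilla, as reported in the Chinchilla paper. The result is an estimate, not an exact FLOP count.

```python
# Rough training-compute comparison, assuming C ≈ 6 * N * D
# (N = parameter count, D = training tokens). This is an approximation.

def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute in FLOPs as 6 * N * D."""
    return 6 * params * tokens

# Assumed figures from the Chinchilla paper: Gopher 280B params / 300B tokens,
# Chinchilla 70B params / 1.4T tokens.
gopher = training_flops(params=280e9, tokens=300e9)
chinchilla = training_flops(params=70e9, tokens=1.4e12)

print(f"Gopher:     {gopher:.2e} FLOPs")
print(f"Chinchilla: {chinchilla:.2e} FLOPs")
print(f"Compute ratio (Chinchilla/Gopher): {chinchilla / gopher:.2f}")
print(f"Parameter ratio (Gopher/Chinchilla): {280e9 / 70e9:.0f}x")
print(f"Token ratio (Chinchilla/Gopher):     {1.4e12 / 300e9:.2f}x")
```

Under this approximation, both budgets land between 5 × 10^23 and 6 × 10^23 FLOPs, within about 17% of each other. Chinchilla spends that budget on a 4x smaller model trained on several times more tokens.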

Chinchilla outperforms much larger models, including Gopher, GPT-3, Jurassic-1, and Megatron-Turing NLG, on a wide range of evaluation tasks. Because it is smaller, it also needs less compute for fine-tuning and inference. Notably, Chinchilla reaches a state-of-the-art average accuracy of 67.5% on the MMLU benchmark, more than 7 percentage points higher than Gopher. The result calls into question a growing trend in AI: scaling up model size without proportionally increasing the number of training tokens.
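The alternative to "bigger model, same data" is to grow parameters and training tokens together. The Chinchilla paper's headline recommendation is that for every doubling of model size, the number of training tokens should also double. The sketch below makes that concrete by splitting a compute budget under the 6 × N × D approximation. It assumes a fixed ratio of about 20 tokens per parameter. This is a commonly quoted rule of thumb matching Chinchilla's own 1.4T tokens / 70B parameters, not a value stated above. The function name `compute_optimal_split` is hypothetical.

```python
import math

# Assumed rule of thumb (hypothetical constant): ~20 training tokens per
# parameter, roughly Chinchilla's own ratio of 1.4T tokens / 70B parameters.
TOKENS_PER_PARAM = 20

def compute_optimal_split(flops_budget: float, tokens_per_param: float = TOKENS_PER_PARAM):
    """Split a compute budget C ≈ 6 * N * D into (N, D), assuming D = k * N.

    Substituting D = k * N gives C = 6 * k * N**2, so N = sqrt(C / (6 * k)).
    """
    params = math.sqrt(flops_budget / (6 * tokens_per_param))
    tokens = tokens_per_param * params
    return params, tokens

# Chinchilla-scale budget: 6 * 70e9 * 1.4e12 = 5.88e23 FLOPs.
n, d = compute_optimal_split(5.88e23)
print(f"params ≈ {n:.2e}, tokens ≈ {d:.2e}")

# Doubling the budget grows both N and D by sqrt(2) under this assumption,
# rather than putting all of the extra compute into a larger model.
n2, d2 = compute_optimal_split(2 * 5.88e23)
print(f"growth factor: params {n2 / n:.3f}, tokens {d2 / d:.3f}")
```

With a Chinchilla-sized budget, the split recovers about 70 billion parameters and 1.4 trillion tokens. Doubling the budget scales both by the same factor, which is the balanced growth the paper argues for.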

Target users: researchers, data scientists, AI developers, content creators, educators, and businesses looking for efficient natural language processing applications.


Similar Apps

OpenAI Codex

nanoGPT minGPT

Muse

MT-NLG by Microsoft and Nvidia AI

DeepMind RETRO