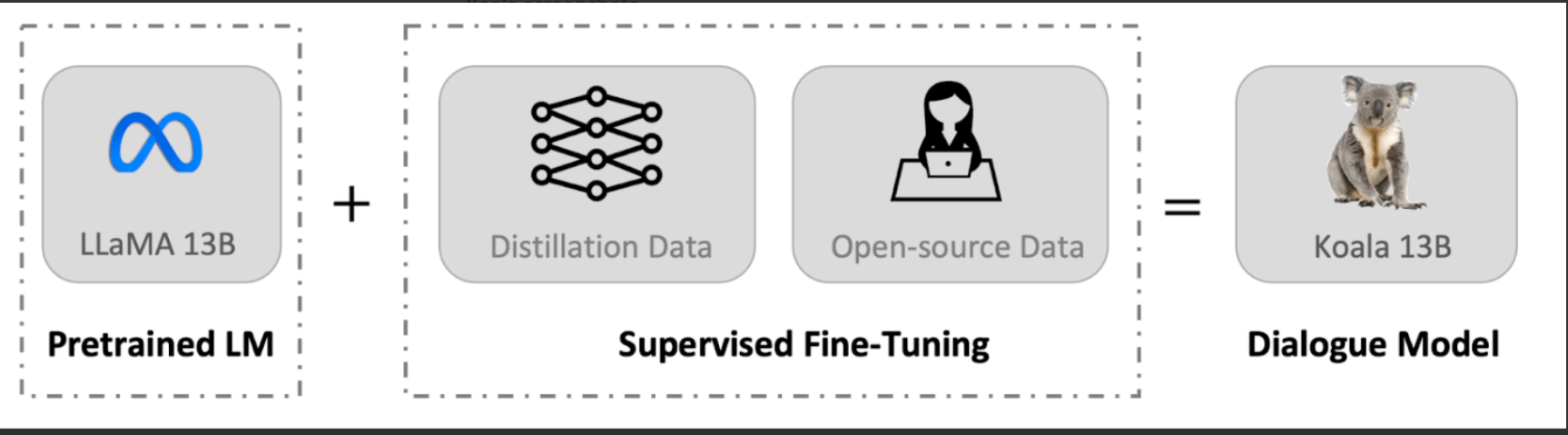

UC Berkeley has introduced “Koala,” a dialogue model intended for research. In user evaluations, Koala's responses rival ChatGPT's and often surpass those of Stanford's Alpaca. It was trained by fine-tuning Meta's LLaMA on dialogue data scraped from the web, especially conversations containing ChatGPT responses. The training process involved curating 60,000 dialogues and filtering them down to 30,000 high-quality examples. The training data also included the English portion of HC3 and other open-source datasets. A public Koala demo is available online.

Target users: researchers, students, and anyone interested in testing or studying dialogue models.


Similar Apps

OpenAI Codex

nanoGPT / minGPT

Muse

MT-NLG by Microsoft and NVIDIA

DeepMind RETRO