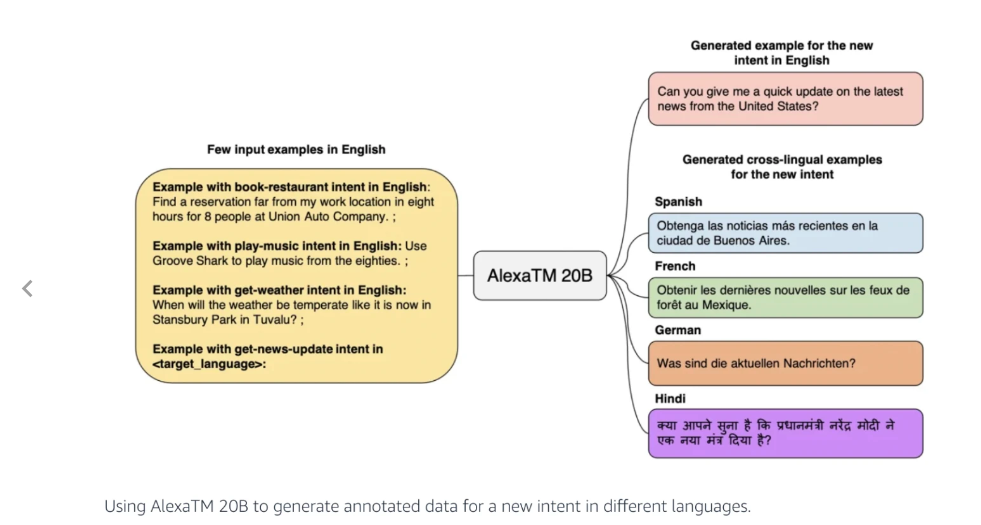

The Alexa Teacher Model (AlexaTM 20B) is a large-scale multilingual seq2seq model with 20 billion parameters. It performs strongly at 1-shot summarization and 1-shot machine translation, especially for low-resource languages, across languages including Arabic, English, French, and others. In zero-shot settings, AlexaTM 20B outperforms GPT-3 (175B) on the SuperGLUE and SQuADv2 datasets, and it also reports state-of-the-art results on multilingual tasks such as XNLI and XCOPA. These results suggest that seq2seq models can be a strong alternative to decoder-only models for large-scale language model (LLM) training.

Target users: Researchers, linguists, developers, translators, educators, and multilingual content creators.

Similar Apps

OpenAI Codex

nanoGPT minGPT

Muse

MT NLG by Microsoft and Nvidia AI

DeepMind RETRO